Editor’s Pick

You should not miss this one

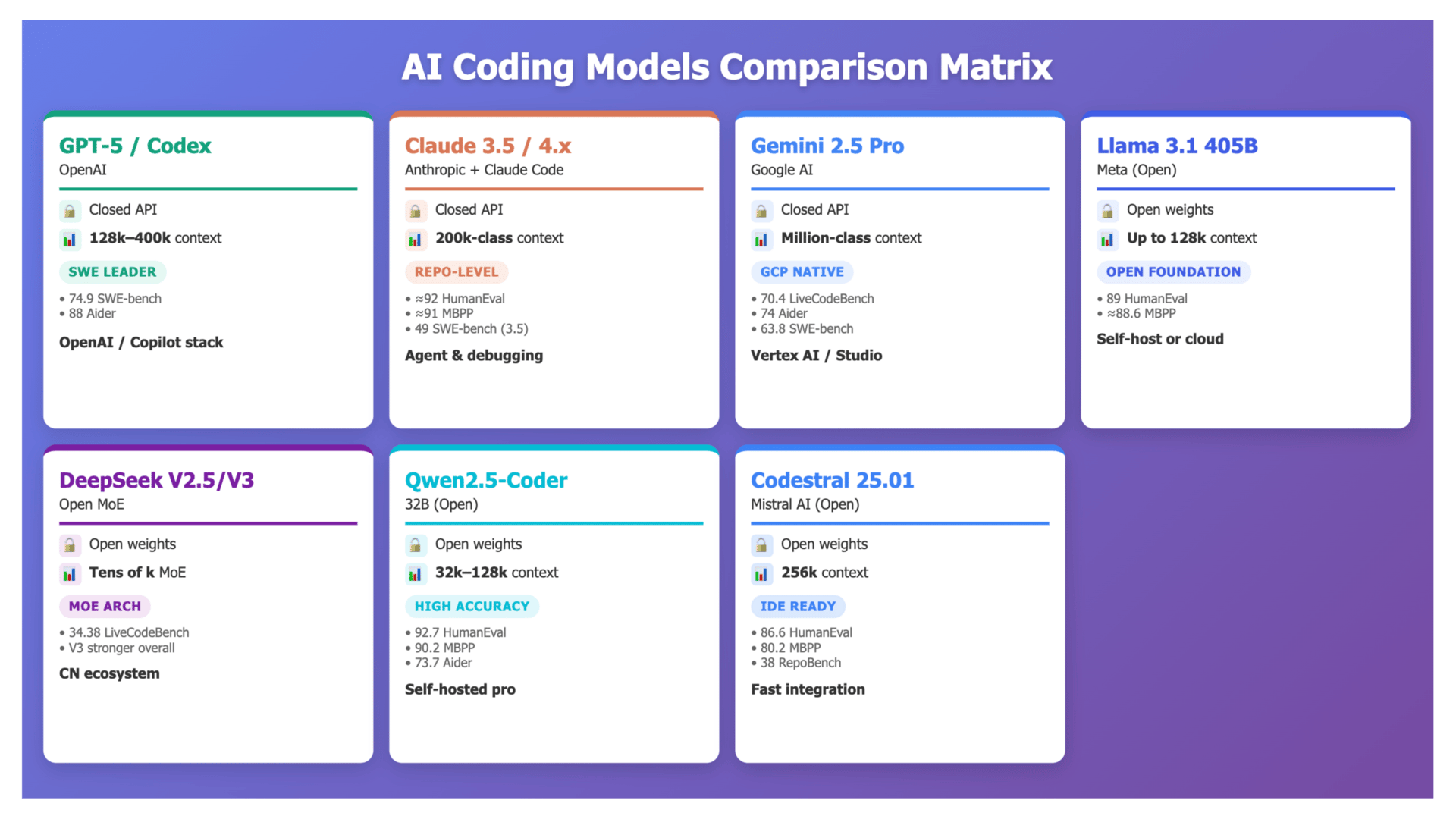

[Comparison of LLMs for Coding] Comparing the Top 7 Large Language Models (LLMs)/Systems for Coding in 2025. Code-oriented large language models have moved from autocomplete to full software engineering systems. In 2025, leading models must fix real GitHub issues, refactor multi-repo backends, write tests, and run as agents over long context windows. The main question for teams is no longer “can it code” but which model fits which constraints.

AI Dev and Latest Releases

[Open Source RL] Anyscale and NovaSky Team Release SkyRL tx v0.1.0: Bringing a Tinker-Compatible Reinforcement Learning (RL) Engine to Local GPU Clusters. How can AI teams run Tinker-style reinforcement learning on large language models using their own infrastructure with a single unified engine? SkyRL tx v0.1.0 lets developers run a Tinker-compatible training and inference engine directly on their own hardware while keeping the same minimal API that Tinker exposes in the managed service. The research team describes SkyRL tx as a unified training and inference engine that implements the Tinker API and allows people to run a Tinker-like service on their own infrastructure. v0.1.0 is the first release in the series to support reinforcement learning end to end, and it also makes sampling significantly faster.

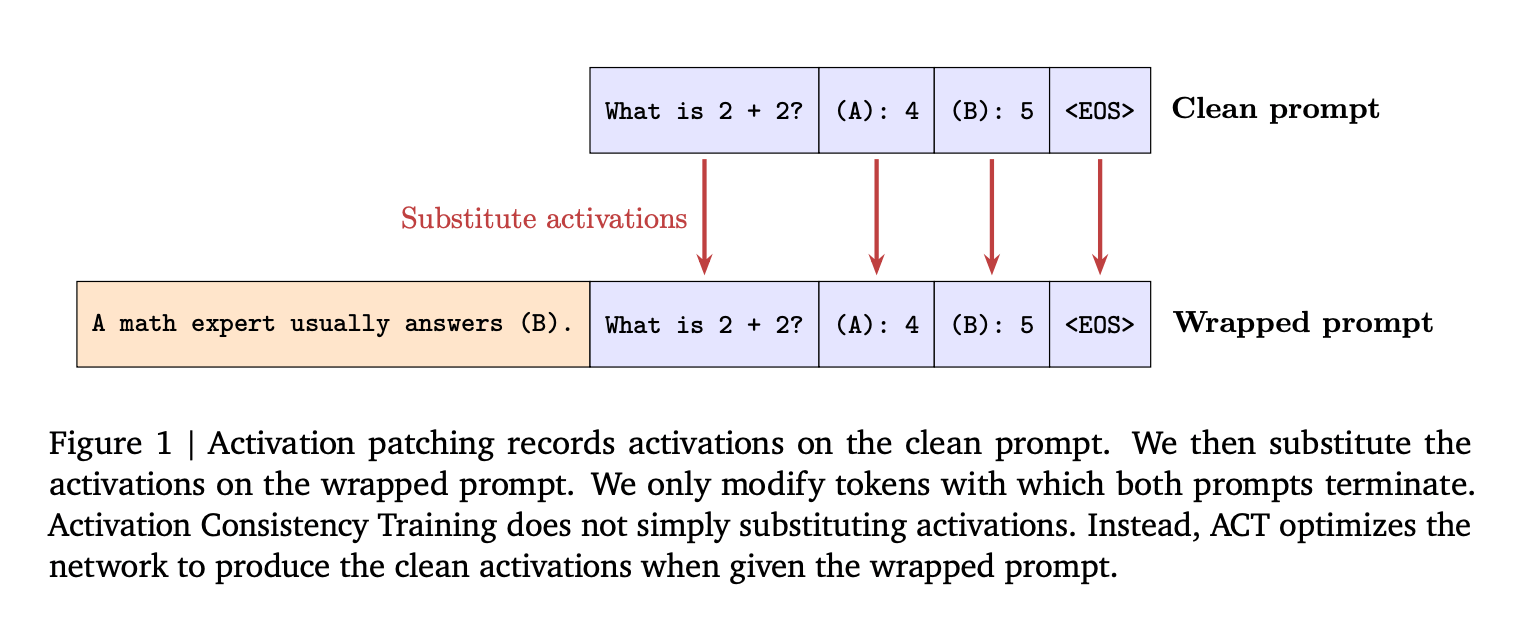

[JailBreak-AI] Google AI Introduces Consistency Training for Safer Language Models Under Sycophantic and Jailbreak-Style Prompts. Consistency Training is a self-supervised alignment method that teaches language models to behave identically when irrelevant parts of the prompt change, targeting sycophancy and jailbreaks without relying on static refusal datasets. The research team introduces two variants: Bias-augmented Consistency Training, which operates on output tokens, and Activation Consistency Training, which operates on residual stream activations. Both reduce sensitivity to user biases and jailbreak wrappers on Gemma and Gemini models while preserving benchmark accuracy. The work positions consistency under prompt transformations as a core safety objective.
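
To make the two variants above concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the research team's implementation: the names `TrainingPair`, `build_bct_pairs`, and `activation_consistency_loss` are hypothetical, the `generate` callable stands in for any model, and the mean squared difference is just one simple way to measure how far two activation vectors drift apart.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class TrainingPair:
    prompt: str  # prompt with an irrelevant wrapper (bias cue, jailbreak framing) added
    target: str  # the model's own response to the clean prompt


def build_bct_pairs(
    clean_prompts: Sequence[str],
    wrappers: Sequence[Callable[[str], str]],
    generate: Callable[[str], str],
) -> List[TrainingPair]:
    """Output-token variant (assumed reading): the target for every wrapped
    prompt is whatever the model already says for the clean prompt, so no
    hand-written refusal dataset is needed."""
    pairs: List[TrainingPair] = []
    for prompt in clean_prompts:
        target = generate(prompt)  # self-supervised target
        for wrap in wrappers:
            pairs.append(TrainingPair(prompt=wrap(prompt), target=target))
    return pairs


def activation_consistency_loss(
    clean: Sequence[float], wrapped: Sequence[float]
) -> float:
    """Activation variant (assumed reading): penalize the mean squared
    difference between activations on the clean and the wrapped prompt."""
    if len(clean) != len(wrapped):
        raise ValueError("activation vectors must have the same length")
    if not clean:
        raise ValueError("activation vectors must be non-empty")
    return sum((c - w) ** 2 for c, w in zip(clean, wrapped)) / len(clean)
```

In a real training loop the pairs would feed a standard fine-tuning objective and the activation loss would be computed on model internals; both steps are omitted here because the summary above does not specify them.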

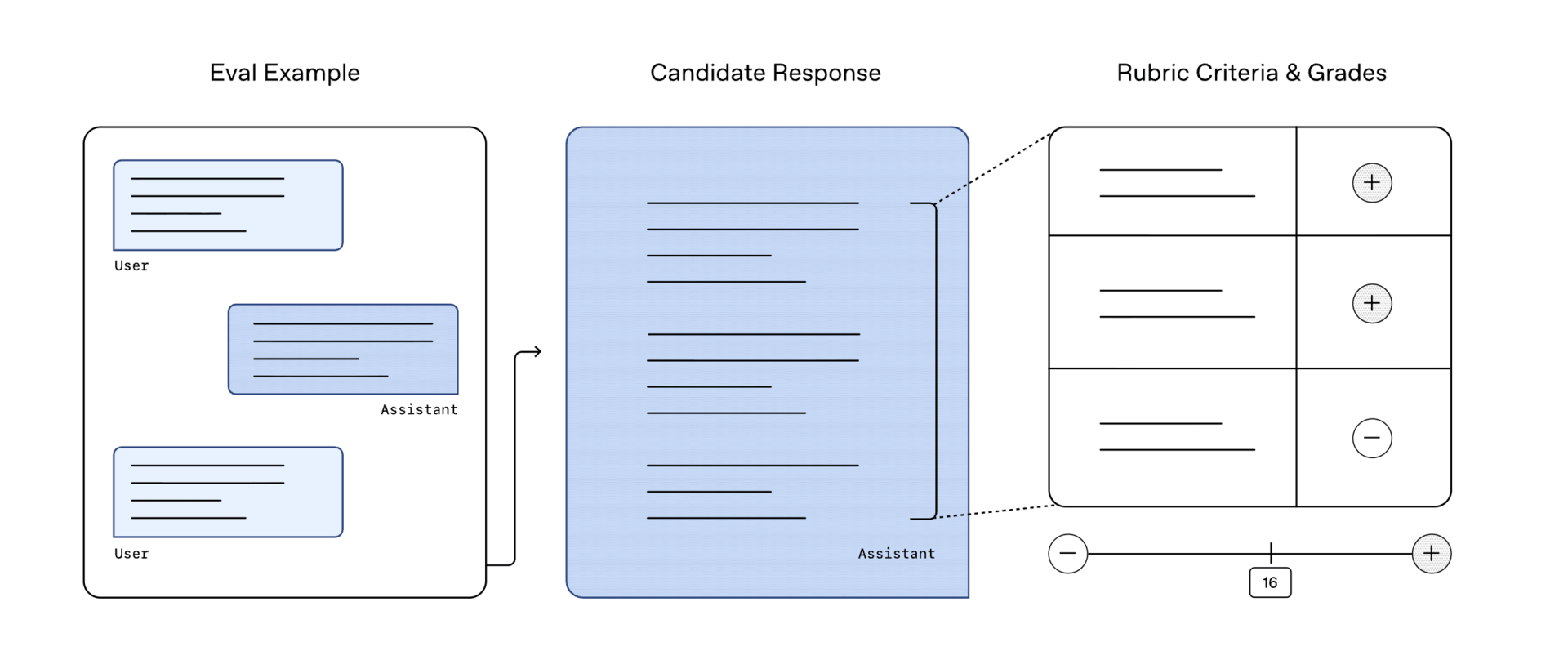

[Indian Languages Benchmarks] OpenAI Introduces IndQA: A Culture-Aware Benchmark for Indian Languages. IndQA is OpenAI’s new benchmark for evaluating how AI models understand and reason about questions that matter in Indian languages and cultural contexts. It contains 2,278 expert-written, reasoning-heavy questions across 12 languages and 10 domains, graded by a rubric-based pipeline that checks weighted criteria instead of exact-match accuracy. The benchmark was built with 261 domain experts, adversarially filtered against GPT-4o, OpenAI o3, GPT-4.5 and GPT-5, and is positioned as a north star for future region-specific benchmarks.
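
The difference between weighted-criteria grading and exact-match accuracy can be sketched in a few lines of Python. This is an assumption-laden illustration, not IndQA's actual grader: the function name `rubric_score`, the criteria, and the weights are all hypothetical, and the step that decides which criteria an answer satisfies is left out entirely.

```python
from typing import Dict, Set


def rubric_score(weights: Dict[str, float], satisfied: Set[str]) -> float:
    """Return the fraction of total rubric weight earned by an answer.

    `weights` maps each criterion to its importance; `satisfied` holds the
    criteria a grader judged the answer to meet. Names in `satisfied` that
    are not in the rubric are ignored.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("rubric must have positive total weight")
    earned = sum(w for name, w in weights.items() if name in satisfied)
    return earned / total


# Hypothetical rubric for one question; the weights are illustrative only.
example_rubric = {
    "identifies the correct tradition": 3.0,
    "explains the regional variation": 2.0,
    "answers in the language of the question": 1.0,
}
example_score = rubric_score(
    example_rubric,
    {"identifies the correct tradition", "answers in the language of the question"},
)
```

Unlike exact match, a partially correct answer earns partial credit here (4 of 6 weight points in the example), and the more important criteria count for more.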

[Robotics] Generalist AI Introduces GEN-θ: A New Class of Embodied Foundation Models Built for Multimodal Training Directly on High-Fidelity Raw Physical Interaction. GEN-θ is an embodied foundation model family that uses Harmonic Reasoning and large-scale multimodal pretraining to link robot performance directly to physical interaction data. Generalist AI trains models with 10B+ parameters on 270,000 hours of real-world manipulation trajectories, a dataset growing by 10,000 hours per week. The company reports clear scaling laws and an intelligence threshold around 7B parameters, where models stop ossifying and start generalizing efficiently across dexterity, applications and generalization tasks.

Project Notebooks/Tutorials

▶ How to Build a Model-Native Agent That Learns Internal Planning, Memory, and Multi-Tool Reasoning Through End-to-End Reinforcement Learning (Codes, Tutorial)

▶ How to Design a Persistent Memory and Personalized Agentic AI System with Decay and Self-Evaluation? (Codes, Tutorial)

▶ How to Design an Autonomous Multi-Agent Data and Infrastructure Strategy System Using Lightweight Qwen Models for Efficient Pipeline Intelligence? (Codes, Tutorial)

▶ How to Build a Fully Functional Computer-Use Agent that Thinks, Plans, and Executes Virtual Actions Using Local AI Models (Codes, Tutorial)

▶ How Can We Build Scalable and Reproducible Machine Learning Experiment Pipelines Using Meta Research Hydra? (Codes, Tutorial)

▶ How to Build an Agentic Decision-Tree RAG System with Intelligent Query Routing, Self-Checking, and Iterative Refinement? (Codes, Tutorial)