Hey folks!

How is your day going?

Let’s dive into today’s newsletter. You can reach out to me directly by email with any suggestions or comments.

-asIF

AI Dev and Latest Releases

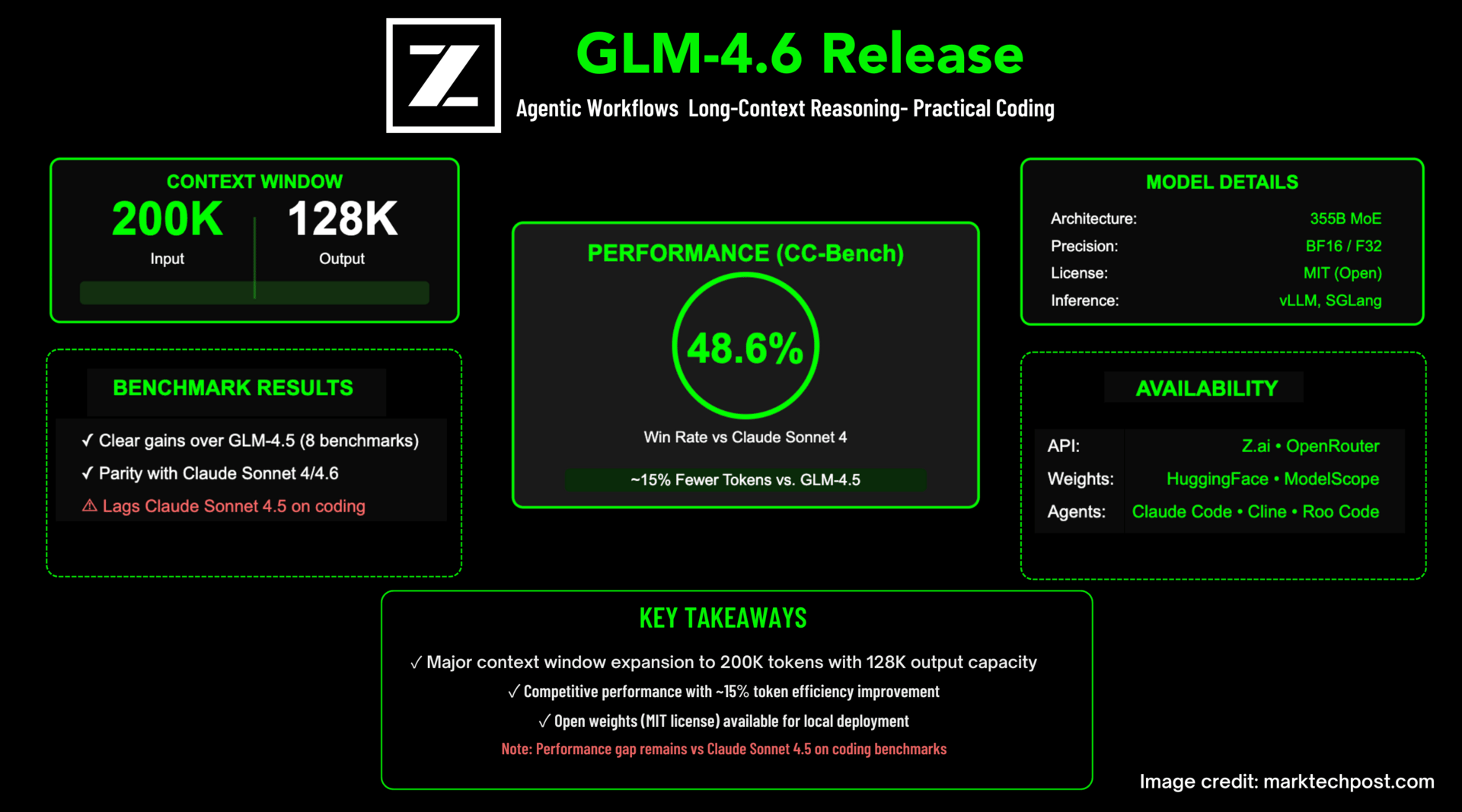

[Agentic Model + Open Weights] Zhipu AI Releases GLM-4.6: Achieving Enhancements in Real-World Coding, Long-Context Processing, Reasoning, Searching and Agentic AI. Zhipu AI’s GLM-4.6 targets long-context, agentic coding with a 200K input window and a 128K maximum output (docs). On CC-Bench it reports ~15% lower token consumption than GLM-4.5 and near-parity with Claude Sonnet 4 (48.6% win rate) in human-evaluated, Docker-isolated tasks spanning front-end builds, tool creation, data analysis, testing, and algorithms (blog). The weights are published under the MIT license on Hugging Face, where the model is listed as a ~355B-parameter MoE, and local inference via vLLM and SGLang is documented (HF/docs). Public access is available through Z.ai and OpenRouter; OpenRouter currently lists a 200K context and pricing of $0.60/M input tokens and $2.20/M output tokens (pricing is platform-specific).
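
To make the OpenRouter pricing concrete, here is a minimal Python sketch that estimates the cost of a single request from its token counts. It assumes the listed rates of $0.60 per million input tokens and $2.20 per million output tokens, which are platform-specific and may change; the function name, the limit checks, and the example token counts are hypothetical, for illustration only.

```python
# Hypothetical helper: per-request cost estimate at OpenRouter's listed GLM-4.6 rates.
# Rates and limits are assumptions taken from the figures quoted above; they may change.
INPUT_USD_PER_M = 0.60
OUTPUT_USD_PER_M = 2.20
MAX_INPUT_TOKENS = 200_000   # input window quoted above
MAX_OUTPUT_TOKENS = 128_000  # maximum output quoted above


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request."""
    if input_tokens > MAX_INPUT_TOKENS:
        raise ValueError("input exceeds the 200K input window")
    if output_tokens > MAX_OUTPUT_TOKENS:
        raise ValueError("output exceeds the 128K maximum output")
    return (input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M) / 1_000_000


if __name__ == "__main__":
    # Example: a large repository prompt (150K tokens) with a 20K-token answer.
    print(f"${estimate_cost_usd(150_000, 20_000):.3f}")
```

Output is billed at roughly 3.7 times the input rate, so long generated answers, not long prompts, tend to dominate the bill for agentic coding sessions.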

[Voice AI] Liquid AI Releases LFM2-Audio-1.5B: An End-to-End Audio Foundation Model with Sub-100 ms Response Latency. Liquid AI’s LFM2-Audio-1.5B is a 1.5B-parameter, end-to-end speech–text model that extends LFM2-1.2B with disentangled audio I/O: continuous embeddings for input audio and discrete Mimi codec tokens (generated via an RQ-Transformer) for output. A FastConformer encoder and interleaved decoding enable sub-100 ms first-token audio latency under the vendor’s test setup, targeting real-time assistants. On VoiceBench, Liquid reports an overall score that surpasses several larger models, alongside competitive ASR metrics, while keeping a single-stack pipeline for ASR, TTS, and speech-to-speech.
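
The output side relies on discrete codec tokens. Neural audio codecs such as Mimi produce them with residual vector quantization (RVQ): each stage quantizes whatever error the previous stages left behind, so one audio frame becomes a short stack of codebook indices, and an RQ-Transformer is designed to predict such stacks. The sketch below is a generic toy illustration of RVQ in plain Python, not Liquid AI’s or Kyutai’s implementation; the 2-D codebooks are made-up values, whereas real codecs learn high-dimensional codebooks.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def rvq_encode(x: Vector, codebooks: List[List[Vector]]) -> List[int]:
    """Encode x as one codebook index per stage, quantizing the running residual."""
    residual = list(x)
    codes = []
    for book in codebooks:
        # Pick the codeword closest to what earlier stages have not yet explained.
        idx = min(range(len(book)), key=lambda i: sq_dist(residual, book[i]))
        codes.append(idx)
        residual = [r - c for r, c in zip(residual, book[idx])]
    return codes


def rvq_decode(codes: List[int], codebooks: List[List[Vector]]) -> List[float]:
    """Reconstruct the vector by summing the selected codeword from every stage."""
    out = [0.0] * len(codebooks[0][0])
    for idx, book in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, book[idx])]
    return out


# Hypothetical toy codebooks: a coarse first stage and a finer second stage.
CODEBOOKS = [
    [(0.0, 0.0), (1.0, 1.0), (-1.0, -1.0)],
    [(0.0, 0.0), (0.25, 0.0), (0.0, 0.25)],
]

if __name__ == "__main__":
    frame = (1.2, 1.0)
    codes = rvq_encode(frame, CODEBOOKS)
    print(codes, rvq_decode(codes, CODEBOOKS))
```

Each added stage refines the reconstruction, which is why a decoder can emit the coarse index first and fill in finer ones afterwards.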

[Most interesting] Thinking Machines Launches Tinker: A Low-Level Training API that Abstracts Distributed LLM Fine-Tuning without Hiding the Knobs. Thinking Machines’ Tinker is a Python training API for post-training open-weight LLMs (e.g., Llama and Qwen, including MoE variants). You write explicit training loops using low-level primitives, and Tinker executes them on managed distributed GPUs, handling orchestration and fault tolerance. Current support is limited to LoRA (not full fine-tuning), with a documented stance that LoRA matches full fine-tuning for RL and small-data supervised learning (SL) regimes; trained adapters are downloadable. The open Cookbook ships editable SL, RL, and RLHF recipes to accelerate adoption. Availability: private beta with a waitlist; free to start, moving to usage-based pricing.
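
Because Tinker currently trains LoRA adapters rather than full weights, it helps to see why the downloadable artifacts are small. The sketch below implements the standard LoRA formulation (frozen weight W plus a scaled low-rank product B·A) in plain Python. It is not Tinker’s API, and the 4096 x 4096 matrix and rank of 16 in the usage example are illustrative assumptions.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: List[float],
                 alpha: float, rank: int) -> List[float]:
    """y = W x + (alpha / rank) * B (A x); W is frozen, only A and B are trained."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))  # A: rank x d_in, B: d_out x rank
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


def lora_param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Return (full fine-tuning params, LoRA adapter params) for one weight matrix."""
    return d_out * d_in, rank * (d_out + d_in)


if __name__ == "__main__":
    full, adapter = lora_param_counts(4096, 4096, rank=16)
    print(full, adapter, f"{adapter / full:.2%}")
```

With B initialized to zeros (the usual LoRA initialization), the adapted layer starts out reproducing the frozen model exactly, and training only moves the r·(d_out + d_in) adapter parameters, here under 1% of the full matrix.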

[Reasoning Model] ServiceNow AI Releases Apriel-1.5-15B-Thinker: An Open-Weights Multimodal Reasoning Model that Hits Frontier-Level Performance on a Single-GPU Budget. ServiceNow AI Research’s Apriel-1.5-15B-Thinker is a 15-billion-parameter, open-weights multimodal reasoning model. It was trained via mid-training (continual pretraining) plus supervised fine-tuning, with no reinforcement learning, and achieves a score of 52 on the Artificial Analysis Intelligence Index (AAI). Disclosed task results include AIME 2025 ≈88, GPQA Diamond ≈71, LiveCodeBench ≈73, Instruction-Following Benchmark 62, and Tau-squared Bench (Telecom) 68. The model is built by depth-upscaling Pixtral-12B-Base-2409, is released under the MIT license on Hugging Face, and is engineered to run inference on a single GPU.
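
The single-GPU claim is easy to sanity-check with back-of-the-envelope arithmetic: weight memory is roughly parameter count times bytes per parameter. The Python sketch below assumes 15 billion parameters and common storage precisions (BF16 at 2 bytes, 8-bit at 1 byte, 4-bit at 0.5 bytes). It counts weights only and ignores the KV cache, activations, and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate weight-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumed storage precisions; actual deployments may mix precisions per layer.
PRECISIONS = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}

if __name__ == "__main__":
    for name, nbytes in PRECISIONS.items():
        print(f"{name}: {weight_memory_gb(15e9, nbytes):.1f} GB")
```

At BF16 the weights alone come to about 30 GB, which fits within a single 80 GB data-center GPU with headroom for the KV cache; quantized variants shrink the footprint further.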

Editor’s Pick

You should not miss this one

[Open and Hybrid Model] IBM Releases New Granite 4.0 Models with a Novel Hybrid Mamba-2/Transformer Architecture: Drastically Reducing Memory Use without Sacrificing Performance

IBM’s Granite 4.0 is an open-weights LLM family that swaps a monolithic Transformer for a hybrid Mamba-2/Transformer stack, cutting serving memory (IBM reports a >70% reduction for long-context, concurrent inference) while maintaining instruction-following and tool-use quality. The lineup spans ~3B (Micro/H-Micro), ~7B total/~1B active (H-Tiny), and ~32B total/~9B active (H-Small), with BF16 checkpoints and official GGUF conversions for local runtimes. The models are Apache-2.0 licensed, cryptographically signed, and (per IBM) covered by an accredited ISO/IEC 42001 AI management system certification; distribution includes watsonx.ai, Hugging Face, Docker, LM Studio, NVIDIA NIM, Ollama, and Replicate. Benchmarks and specs are detailed in IBM’s launch notes and model cards.
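
The memory savings come mostly from the attention side: a Transformer layer caches keys and values for every token in context, so its KV cache grows linearly with sequence length and batch size, whereas a Mamba-2 layer carries a fixed-size recurrent state. The Python sketch below estimates KV-cache size for a hypothetical 40-layer model (8 KV heads, head dimension 128, BF16) serving 8 concurrent 128K-token requests, once with every layer using attention and once with only 4 attention layers in a hybrid stack. These configuration numbers are illustrative assumptions, not Granite 4.0’s actual architecture, and the small constant Mamba-2 state is omitted.

```python
def kv_cache_bytes(attn_layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int, bytes_per_elem: int = 2) -> int:
    """KV-cache size: keys + values (factor 2) for every attention layer, head, and token."""
    return 2 * attn_layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


GIB = 2 ** 30

if __name__ == "__main__":
    # Hypothetical config, not IBM's: 40 layers, 8 KV heads, head_dim 128, BF16.
    common = dict(kv_heads=8, head_dim=128, seq_len=131_072, batch=8)
    dense = kv_cache_bytes(attn_layers=40, **common)
    hybrid = kv_cache_bytes(attn_layers=4, **common)  # remaining 36 layers assumed Mamba-2
    print(f"all-attention: {dense / GIB:.0f} GiB, hybrid: {hybrid / GIB:.0f} GiB")
```

The tenfold gap in this toy comes entirely from the assumed layer mix; IBM’s reported >70% figure reflects its own configurations and workloads.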

4 Updates: Agents, MCP & Voice AI

[Anthropic Docs] Effective context engineering for AI agents

[Microsoft Agent Framework] Microsoft Announces Open-Source Agent Framework to Simplify AI Agent Development