AI Dev and Latest Releases

[Agentic + Open Source] Hugging Face Releases Smol2Operator: A Fully Open-Source Pipeline to Train a 2.2B VLM into an Agentic GUI Coder. Smol2Operator is a fully open-source, reproducible pipeline that upgrades SmolVLM2-2.2B-Instruct, a VLM with zero GUI grounding, into an agentic GUI coder via a two-phase SFT process. The release standardizes heterogeneous GUI action schemas into a unified API with normalized coordinates, provides transformed AGUVIS-based datasets, publishes training notebooks and preprocessing code, and ships a final checkpoint plus a demo Space. It prioritizes process transparency and portability over leaderboard chasing, and it slots into the smolagents runtime with ScreenEnv for evaluation, offering a practical blueprint for teams building small, operator-grade GUI agents.
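
To make the "normalized coordinates" idea concrete, here is a minimal sketch of resolution-independent click actions. It is not the Smol2Operator API: the `Click` type, both function names, and the choice of a [0, 1] coordinate range are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Click:
    """Hypothetical unified action: x and y are fractions of the screen size."""
    x: float
    y: float


def normalize_click(px: int, py: int, width: int, height: int) -> Click:
    """Convert a pixel-space click into resolution-independent coordinates."""
    if width <= 0 or height <= 0:
        raise ValueError("screen dimensions must be positive")
    return Click(x=round(px / width, 4), y=round(py / height, 4))


def denormalize_click(action: Click, width: int, height: int) -> tuple[int, int]:
    """Map a normalized click back to pixels on a (possibly different) screen."""
    return round(action.x * width), round(action.y * height)


# A click recorded on a 1920x1080 screenshot...
click = normalize_click(960, 270, 1920, 1080)
# ...replayed on a 1280x720 screen.
replayed = denormalize_click(click, 1280, 720)
```

Storing actions this way lets datasets recorded at different resolutions share one schema, which is the practical benefit the release describes.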

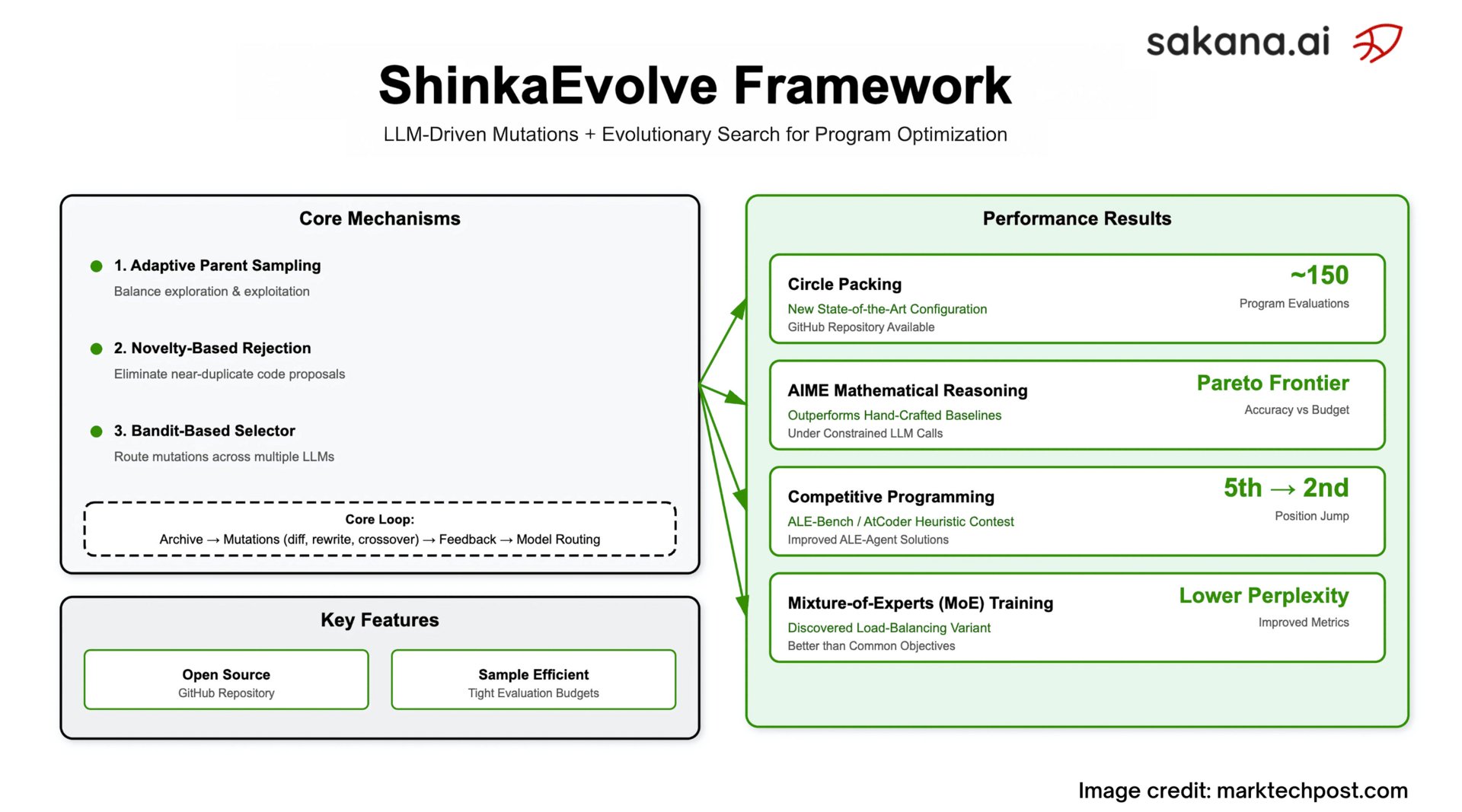

[Open Source] Sakana AI Released ShinkaEvolve: An Open-Source Framework that Evolves Programs for Scientific Discovery with Unprecedented Sample-Efficiency. ShinkaEvolve is an open-source framework that combines LLM-driven code mutations with evolutionary search and three efficiency controls—adaptive parent sampling, novelty-based rejection, and bandit-based model selection—to optimize programs under small evaluation budgets. It reports a new state-of-the-art circle-packing (n=26) configuration in ~150 evaluations; evolves AIME reasoning scaffolds along an accuracy-vs-LLM-calls Pareto frontier; improves ALE-Bench competitive-programming baselines (including a documented 5th→2nd shift on one task); and discovers a novel Mixture-of-Experts load-balancing loss that lowers perplexity and improves downstream metrics.
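
As a rough illustration of bandit-based model selection, the sketch below uses the textbook UCB1 rule to decide which LLM proposes the next mutation. The summary above does not say which bandit algorithm ShinkaEvolve uses, so UCB1 is a stand-in, and the class name, model names, and fixed rewards are all hypothetical.

```python
import math


class UCB1ModelSelector:
    """Pick which LLM to query next using UCB1 (illustrative stand-in)."""

    def __init__(self, model_names):
        self.names = list(model_names)
        self.counts = {name: 0 for name in self.names}
        self.totals = {name: 0.0 for name in self.names}

    def select(self) -> str:
        # Try every model once before comparing confidence bounds.
        for name in self.names:
            if self.counts[name] == 0:
                return name
        total_pulls = sum(self.counts.values())

        def upper_bound(name: str) -> float:
            mean = self.totals[name] / self.counts[name]
            bonus = math.sqrt(2 * math.log(total_pulls) / self.counts[name])
            return mean + bonus

        return max(self.names, key=upper_bound)

    def update(self, name: str, reward: float) -> None:
        self.counts[name] += 1
        self.totals[name] += reward


# Toy rewards: pretend model-b's mutations improve fitness more often.
toy_reward = {"model-a": 0.1, "model-b": 0.9}
selector = UCB1ModelSelector(toy_reward)
for _ in range(100):
    chosen = selector.select()
    selector.update(chosen, toy_reward[chosen])
```

With a small evaluation budget, the selector spends most calls on the model whose mutations pay off while still occasionally re-testing the other.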

[Security + Open Source] Meet Qwen3Guard: The Qwen3-based Multilingual Safety Guardrail Models Built for Global, Real-Time AI Safety. Qwen3Guard is an open Qwen3-based safety stack with two modes, Gen (a full-context generative classifier) and Stream (token-time moderation), released in 0.6B/4B/8B sizes, supporting 119 languages and a three-tier risk taxonomy (Safe/Controversial/Unsafe). Stream attaches lightweight heads to score each generated token in real time for early blocking or routing, while Gen emits structured safety judgments suitable for RL reward modeling and dataset filtering. The team reports state-of-the-art F1 across English, Chinese, and multilingual safety benchmarks.
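
The early-blocking pattern that Stream enables can be sketched as a loop that scores each token before it is emitted. This does not call Qwen3Guard: `score_token` is a hypothetical stand-in for a per-token risk head, and the 0.8 threshold is an arbitrary assumption.

```python
from typing import Callable, Iterable, List, Tuple


def moderate_stream(
    tokens: Iterable[str],
    score_token: Callable[[List[str], str], float],
    unsafe_threshold: float = 0.8,
) -> Tuple[List[str], bool]:
    """Emit tokens until one is scored as unsafe; return (emitted, blocked)."""
    emitted: List[str] = []
    for token in tokens:
        # The scorer sees the text so far plus the candidate token.
        risk = score_token(emitted, token)
        if risk >= unsafe_threshold:
            return emitted, True  # stop before the risky token is shown
        emitted.append(token)
    return emitted, False


def toy_scorer(context: List[str], token: str) -> float:
    """Toy scorer: flags one placeholder token, ignores context."""
    return 1.0 if token == "BAD" else 0.0


safe_prefix, blocked = moderate_stream(["a", "b", "BAD", "c"], toy_scorer)
```

A production router might send borderline scores to a slower full-context check instead of blocking outright, which is the "routing" option mentioned above.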

[Agentic Robotics] Gemini Robotics 1.5: DeepMind’s ER↔VLA Stack Brings Agentic Robots to the Real World. Google DeepMind’s Gemini Robotics 1.5 splits embodied intelligence into ER 1.5 (planning/spatial reasoning, tool use, success/progress estimation) and a VLA action model (execution with “think-before-act” traces), enabling long-horizon tasks and web-informed plans. Motion transfer reuses skills across heterogeneous robots (ALOHA, Franka, Apptronik Apollo), reducing per-platform retraining. ER 1.5 is available in preview via the Gemini API; VLA access is limited to select partners. Public materials emphasize layered safety and upgraded ASIMOV evaluations.

[Inference Library] Meet oLLM: A Lightweight Python Library that Brings 100K-Context LLM Inference to 8 GB Consumer GPUs via SSD Offload, No Quantization Required. oLLM provides working examples for Llama-3 (1B/3B/8B), GPT-OSS-20B, and Qwen3-Next-80B (sparse MoE; ~3–3.9B active params) with model-dependent long contexts (e.g., 100K for Llama-3; 50K shown for Qwen3-Next-80B). The README reports footprints of around 5–8 GB VRAM plus tens to hundreds of GB on SSD. Throughput for the 80B MoE example is ~0.5 tok/s on an RTX 3060 Ti, which is practical for offline workloads but not for interactive serving.
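
A back-of-the-envelope calculation shows why long contexts push the KV cache off an 8 GB card. This is a sizing sketch, not oLLM code: the function name is hypothetical, and the Llama-3-8B shape (32 layers, 8 KV heads, head dimension 128) and 16-bit cache values are assumptions taken from the public model configuration rather than from this summary.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `tokens` positions."""
    # Factor of 2: one key vector and one value vector per head per layer.
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value
    return tokens * per_token


# Assumed Llama-3-8B shape with a 100K-token context and 16-bit values.
llama3_8b_100k = kv_cache_bytes(tokens=100_000, layers=32, kv_heads=8, head_dim=128)
print(f"{llama3_8b_100k / 1e9:.1f} GB")  # prints "13.1 GB"
```

Under these assumptions the cache alone exceeds 8 GB before any weights are loaded, which is consistent with the need to spill data to SSD.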

Editor’s Pick

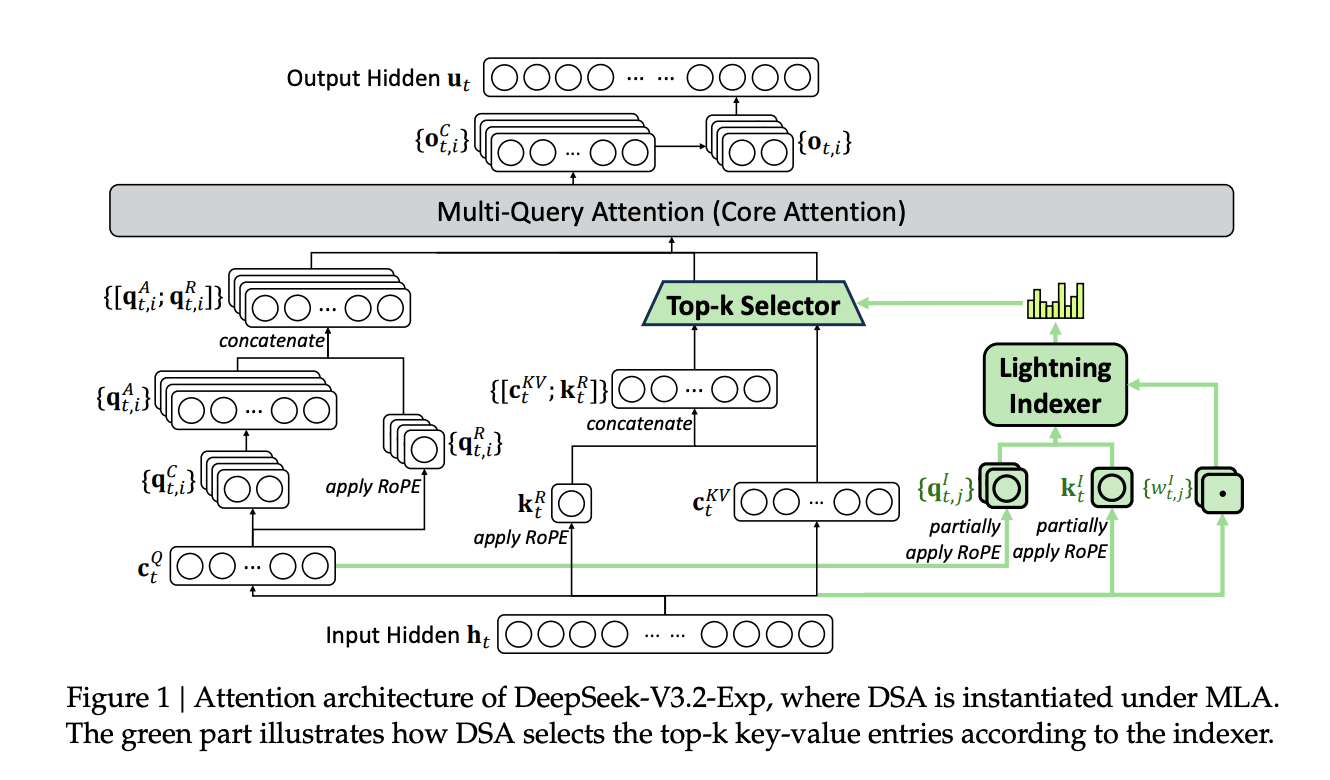

[Security + Agentic] DeepSeek V3.2-Exp Cuts Long-Context Costs with DeepSeek Sparse Attention (DSA) While Maintaining Benchmark Parity

DeepSeek V3.2-Exp is an MIT-licensed, sparsified update to V3.1 that adds DeepSeek Sparse Attention (learned index-select-attend) to reduce long-context compute from O(L²) to O(L·k) while maintaining benchmark parity (e.g., MMLU-Pro ≈ 85.0). A lightweight FP8 indexer proposes a ~2,048-token subset per query, after which the core module attends only to that set; the indexer is trained to imitate dense attention via KL divergence. Officially, DeepSeek cut API prices by 50%+, with day-0 runtime support in vLLM and SGLang; larger social claims (~6× cheaper decode at 128k) remain to be independently replicated. Practically, it’s a drop-in A/B for RAG and long-document pipelines to lower decode costs without sacrificing quality.
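
The index-select-attend pattern can be sketched for a single query in plain Python. This is a toy illustration, not DeepSeek's implementation: `index_scores` stands in for the output of the learned indexer, and the function name and tiny vectors are hypothetical.

```python
import math


def sparse_attend(query, keys, values, index_scores, k):
    """Attend over only the k positions ranked highest by the indexer."""
    # Select: keep the k positions with the largest indexer scores.
    ranked = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)
    selected = ranked[:k]
    # Attend: scaled dot-product softmax restricted to the selected positions.
    scale = 1.0 / math.sqrt(len(query))
    logits = [scale * sum(q * x for q, x in zip(query, keys[i])) for i in selected]
    peak = max(logits)
    weights = [math.exp(logit - peak) for logit in logits]
    norm = sum(weights)
    dim = len(values[0])
    output = [
        sum(w / norm * values[i][d] for w, i in zip(weights, selected))
        for d in range(dim)
    ]
    return sorted(selected), output


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
picked, out = sparse_attend([1.0, 0.0], keys, values, index_scores=[0.9, 0.1, 0.5], k=1)
```

Counting only the attend step, each query touches k keys instead of L. With L = 131,072 and k = 2,048, that is 64 times fewer query-key pairs. This figure ignores the indexer's own cost and is not an end-to-end speedup estimate.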