AI Dev and Latest Releases

[Voice AI] Google Introduces Speech-to-Retrieval (S2R), an Approach that Maps a Spoken Query Directly to an Embedding and Retrieves Information without First Converting Speech to Text. Google’s Speech-to-Retrieval (S2R) replaces the ASR→text→retrieval cascade with a dual-encoder system that maps a spoken query directly to an audio embedding and retrieves the documents whose embeddings match it. S2R now powers Voice Search in production in multiple languages. In evaluations, it beats the cascade baseline and approaches an upper bound built from human-verified transcripts. Google has also released the Simple Voice Questions (SVQ) dataset (17 languages, 26 locales) under the Massive Sound Embedding Benchmark (MSEB) to standardize measurement.
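To make the retrieval step concrete, here is a minimal sketch of dual-encoder lookup by cosine similarity. It is not Google’s implementation: the embeddings are hand-written toy vectors standing in for the outputs of an audio encoder and a document encoder, and the names `cosine`, `retrieve`, and the document IDs are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query_embedding, doc_embeddings, top_k=2):
    """Rank documents by similarity to the query embedding and keep the top_k."""
    scored = [(doc_id, cosine(query_embedding, emb))
              for doc_id, emb in doc_embeddings.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Assumption: toy 3-dimensional vectors; a real system would use learned,
# high-dimensional embeddings produced by the two encoders.
doc_embeddings = {
    "doc_weather": [0.9, 0.1, 0.0],
    "doc_sports": [0.0, 0.8, 0.6],
    "doc_recipes": [0.1, 0.0, 0.95],
}

# Assumption: this vector plays the role of the audio encoder's output
# for a spoken query; no transcript is produced at any point.
audio_query_embedding = [0.85, 0.2, 0.05]

results = retrieve(audio_query_embedding, doc_embeddings)
print(results)
```

The point of the sketch is that the query never passes through text: ranking depends only on how close the audio embedding lies to each document embedding.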

[Upcoming AI Live Webinar] Scaling AI with Haystack Enterprise: A Developer’s Guide. You’ll learn how to: extend your expertise with direct access to the Haystack engineering team through private support and consultation hours; deploy with confidence using Helm charts and best-practice guides for secure, scalable Kubernetes setups across cloud (e.g., AWS, Azure, GCP) or on-prem; accelerate iteration with pre-built templates for everything from simple RAG pipelines to agents and multimodal workflows, complete with Hayhooks and Open WebUI; and stay ahead of threats with early access to enterprise-grade, security-focused features like prompt injection countermeasures. (Sponsored)

[LLM] SwiReasoning: Entropy-Driven Alternation of Latent and Explicit Chain-of-Thought for Reasoning LLMs. SwiReasoning is a training-free, model-agnostic decoding controller that alternates between latent reasoning (silent, no token emission) and explicit chain-of-thought. It decides when to switch from block-wise confidence, estimated from next-token entropy trends, and caps the number of switches to curb overthinking. Across mathematics/STEM suites it reports +1.5–2.8% average Pass@1 gains under unlimited tokens and +56–79% average token-efficiency improvements under constrained budgets. It also reaches peak accuracy earlier on AIME’24/’25, shifting the accuracy–efficiency Pareto frontier without fine-tuning.
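The switching idea can be illustrated with a toy controller. This is a sketch under stated assumptions, not the authors’ algorithm: it assumes that falling entropy (rising confidence) triggers explicit reasoning and rising entropy triggers latent reasoning, that decoding starts in latent mode, and that one entropy value is available per block. The function names and the `max_switches` parameter are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a next-token probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def plan_modes(block_entropies, max_switches=2):
    """Pick a reasoning mode per block from the entropy trend.

    Assumption: entropy going down means confidence is rising, so the
    controller prefers explicit chain-of-thought; entropy going up sends
    it back to latent reasoning. Once max_switches is reached, the mode
    is frozen, which is the toy analogue of a switch-count cap.
    """
    mode = "latent"  # assumption: start in latent mode
    switches = 0
    modes = [mode]
    for prev, curr in zip(block_entropies, block_entropies[1:]):
        if curr < prev:
            wanted = "explicit"
        elif curr > prev:
            wanted = "latent"
        else:
            wanted = mode  # flat trend: stay where we are
        if wanted != mode and switches < max_switches:
            mode = wanted
            switches += 1
        modes.append(mode)
    return modes

# Hypothetical per-block entropies from a decoding run.
block_entropies = [1.2, 0.9, 1.1, 0.7, 1.0]
modes = plan_modes(block_entropies, max_switches=2)
print(modes)
```

With a cap of two switches, the controller stops alternating after the second change even though the entropy trend keeps flipping, which is how a cap limits overthinking in this toy setting.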

[Thinking Model] Ant Research Group releases Ring-1T, an open-source trillion-parameter thinking model built on the Ling 2.0 architecture. Ring-1T is a “thinking” MoE model (1T total params, ~50B active) with up to 128K context. The release includes BF16 weights and an FP8 variant for lower-precision deployments on Hugging Face, along with SGLang inference instructions and MIT licensing. Training used large-scale RL with verifiable rewards (RLVR) and RLHF, stabilized via the Icepop method and an in-house ASystem RL stack. Reported evaluations cover math, coding, and reasoning benchmarks, plus case studies on IMO/ICPC tasks. Downloads and usage details are provided on the model card.

[Mamba Series] Mamba-3 quietly appears as an ICLR 2026 submission. Mamba-3 is an “inference-first” state-space model (SSM) that strengthens linear-time sequence modeling along three axes: (1) quality via a higher-order trapezoidal discretization that subsumes the short convolution, (2) capability via a complex-valued state update equivalent to data-dependent RoPE that restores state-tracking on formal-language tasks, and (3) throughput via a multi-input multi-output (MIMO) SSM that raises arithmetic intensity and improves decode utilization. Pretrained on 100B FineWeb-Edu tokens and evaluated at 180M–1.5B scales, Mamba-3 matches or exceeds Mamba-2, Gated DeltaNet, and Transformer baselines on downstream language tasks. It advances the performance–efficiency Pareto frontier under a fixed inference budget, while still trailing attention on retrieval.

Editor’s Pick

You should not miss this one

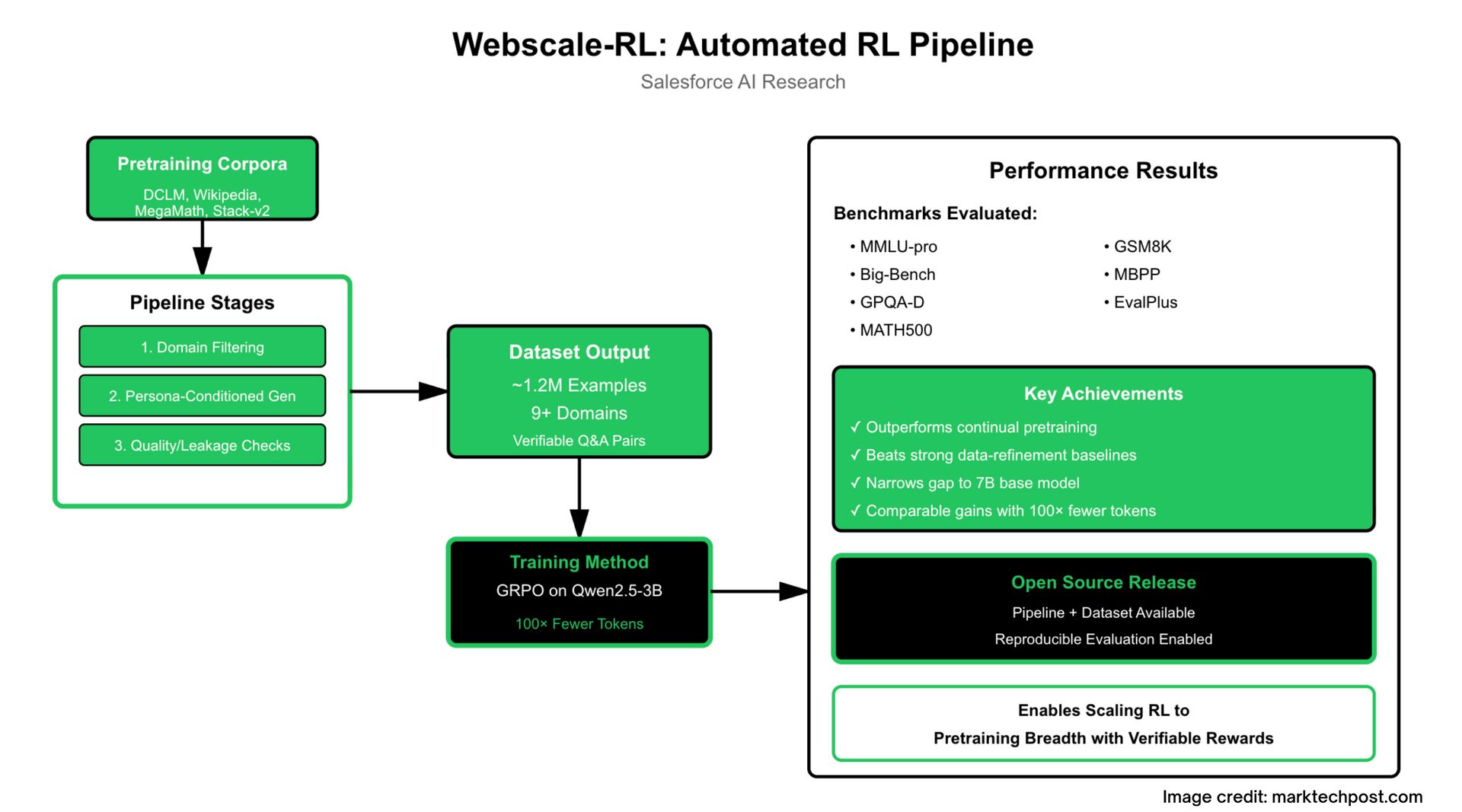

[RL] What if RL had pretraining-scale data? Now it does. Salesforce AI Research has introduced Webscale-RL, a highly scalable synthetic data pipeline and dataset that closes the data gap between RL and pretraining by turning pretraining corpora into diverse RL simulation environments with millions of tasks. Webscale-RL is an automated pipeline that converts pretraining corpora (e.g., DCLM, Wikipedia, MegaMath, Stack-v2) into verifiable RL question–answer pairs via domain filtering, persona-conditioned generation, and multi-stage quality/leakage checks, yielding a ~1.2M-example dataset across 9+ domains. Using GRPO to train Qwen2.5-3B, RL on Webscale-RL outperforms continual pretraining and strong data-refinement baselines on MMLU-pro, Big-Bench, GPQA-D, MATH500, GSM8K, and MBPP/EvalPlus, and narrows the gap to the 7B base model, while achieving comparable gains with up to 100× fewer tokens. The pipeline and dataset are released to enable scaling RL to pretraining breadth with verifiable rewards and reproducible evaluation.
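For readers new to GRPO with verifiable rewards, the core scoring step can be sketched in a few lines. This is an illustrative sketch, not the Webscale-RL training code: the exact-match reward, the population standard deviation, the `eps` term, and all names below are assumptions chosen for clarity.

```python
import statistics

def exact_match_reward(prediction, reference):
    """Verifiable reward: 1.0 if the answer matches the reference, else 0.0.

    Assumption: case- and whitespace-insensitive exact match; real
    verifiers may be more lenient or domain-specific.
    """
    return 1.0 if prediction.strip().lower() == reference.strip().lower() else 0.0

def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each reward against its own group of sampled answers.

    GRPO scores a response relative to the other responses sampled for
    the same question, which removes the need for a learned value model.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # assumption: population std
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical group of four sampled answers to one question whose
# reference answer is "Paris".
samples = ["Paris", "paris ", "Lyon", "Marseille"]
rewards = [exact_match_reward(s, "Paris") for s in samples]
advantages = group_relative_advantages(rewards)

print(rewards)
print(advantages)
```

Correct answers receive positive advantages and incorrect ones negative, so the policy update pushes probability toward responses that pass the check. This is why question–answer pairs with checkable answers are the unit the pipeline produces.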