Here is today's AI Dev Brief from Marktechpost, covering core research, models, infrastructure tools, and applied updates for AI developers and researchers.

Meta AI Researchers Introduce Matrix: A Ray-Native, Decentralized Framework for Multi-Agent Synthetic Data Generation

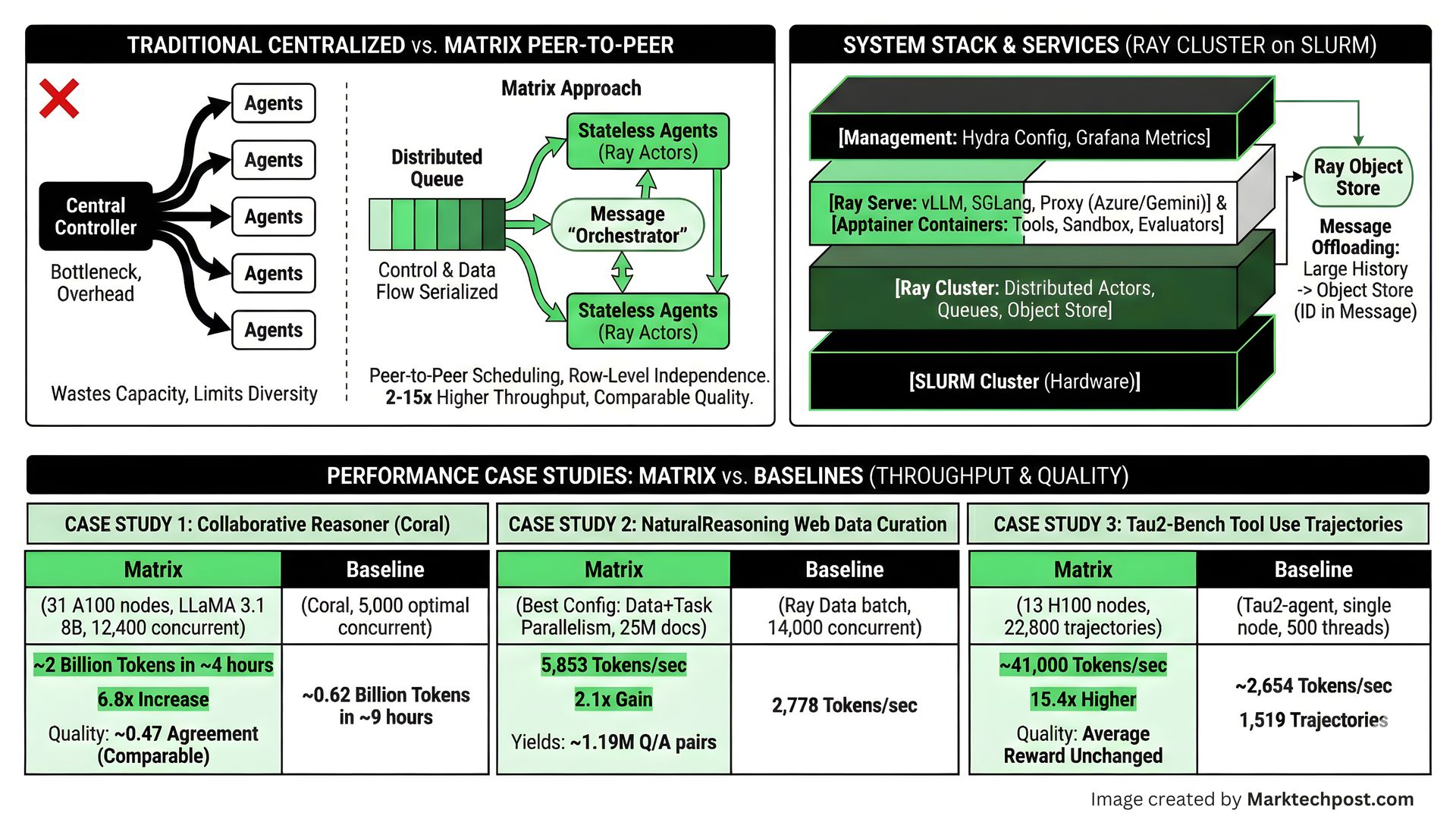

Matrix is a peer-to-peer multi-agent framework from Meta for synthetic data generation. It replaces a central orchestrator with serialized messages passed through distributed queues, and runs on Ray with SLURM and open-source LLM backends. On workloads such as Collaborative Reasoner, NaturalReasoning and Tau2 Bench, it achieves about 2 to 15 times higher token throughput on the same hardware while maintaining comparable output quality. Read the full insights/article here.
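
To make the "no central orchestrator" idea concrete, here is a minimal single-process sketch. It is not Matrix's API and does not use Ray; the agent names (`solver`, `critic`), the message fields, and the function `run_peer_to_peer` are all hypothetical. Each task travels as a serialized message that carries its own state, and whichever agent handles it decides where it goes next.

```python
import json
from collections import deque


def run_peer_to_peer(num_tasks, max_turns=4):
    """Toy simulation: tasks hop between agent inboxes as serialized messages."""
    # One inbox per agent (hypothetical roles, stand-ins for distributed queues).
    queues = {"solver": deque(), "critic": deque()}
    done = []

    # Seed every task into the first agent's inbox as a JSON string.
    for task_id in range(num_tasks):
        msg = {"id": task_id, "turn": 0, "max_turns": max_turns, "history": []}
        queues["solver"].append(json.dumps(msg))

    # In a real deployment each agent would be its own worker; this loop only
    # simulates the workers taking turns inside one process.
    while any(queues.values()):
        for agent, inbox in queues.items():
            if not inbox:
                continue
            msg = json.loads(inbox.popleft())
            msg["history"].append(agent)  # stand-in for an LLM call
            msg["turn"] += 1
            if msg["turn"] >= msg["max_turns"]:
                done.append(msg)
            else:
                # The handling agent forwards the message itself; no
                # orchestrator tracks per-task state.
                target = "critic" if agent == "solver" else "solver"
                queues[target].append(json.dumps(msg))
    return done


results = run_peer_to_peer(num_tasks=3)
```

Because all task state lives in the message, tasks progress independently and no single component has to track every conversation.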

[Time Sensitive] MiniMax - Developer Ambassador Program Application (Sponsored)

MiniMax has opened applications for its Developer Ambassador Program, aimed at independent ML and LLM developers who are already building with MiniMax models. Ambassadors get access to upgraded or free plans, early access to new releases, direct channels to the product and R&D teams, and visibility for their work through the MiniMax community and events. Check out the details.

DeepSeek Researchers Introduce DeepSeek-V3.2 and DeepSeek-V3.2-Speciale for Long Context Reasoning and Agentic Workloads

DeepSeek-V3.2 and DeepSeek-V3.2-Speciale are open, 685B-parameter MoE models. They combine DeepSeek Sparse Attention, which gives near-linear O(kL) long-context scaling over a 128K context window, with a GRPO-based RL phase that uses more than 10% of pre-training compute across math, code, reasoning and agent tasks, and an agent-native tool protocol with thinking and non-thinking modes. DeepSeek-V3.2-Speciale reaches gold-medal-level performance on IMO, CMO, IOI and ICPC World Finals. Read the full insights/article here.
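
As a rough illustration of what O(kL) scaling means next to dense attention, the sketch below counts query-key pairs under causal masking. The value `k = 2048` is an assumed selection budget chosen for illustration, and the count ignores whatever it costs to choose the k tokens, so treat the ratio as a back-of-the-envelope figure rather than a measured speedup.

```python
def dense_pairs(seq_len):
    """Query-key pairs for dense causal attention: query t attends to t keys."""
    return seq_len * (seq_len + 1) // 2


def sparse_pairs(seq_len, k):
    """Query-key pairs when each query attends to at most k earlier tokens."""
    if seq_len <= k:
        return dense_pairs(seq_len)
    # The first k queries see fewer than k tokens; the rest attend to exactly k.
    return dense_pairs(k) + (seq_len - k) * k


L = 128 * 1024  # 128K context window from the summary above
k = 2048        # assumed per-query budget, for illustration only

ratio = dense_pairs(L) / sparse_pairs(L, k)
print(f"dense / sparse pair count at L={L}, k={k}: {ratio:.1f}x")
```

Doubling `L` roughly doubles `sparse_pairs` but roughly quadruples `dense_pairs`, which is the practical meaning of near-linear scaling here.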

MiniMax-M2: Technical Deep Dive into Interleaved Thinking for Agentic Coding Workflows

MiniMax-M2 is a new Mixture-of-Experts (MoE) model designed specifically for agentic coding workflows that claims to cut costs by over 90% compared to Claude 3.5 Sonnet while doubling inference speed. The model distinguishes itself with an "Interleaved Thinking" architecture, a dynamic Plan → Act → Reflect loop that allows it to self-correct and preserve state during complex tasks rather than relying on a linear, front-loaded plan. With 230B total parameters (but only 10B active per token), MiniMax-M2 aims to deliver the reasoning depth of a large model with the low latency required for real-time tools like Cursor and Cline, offering a significant efficiency upgrade for developers building autonomous agents. Read the full insights/article here.
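
The Plan → Act → Reflect loop can be sketched generically as below. This is a toy control loop, not MiniMax-M2's implementation or API: `interleaved_loop`, the three callables, and the square-root guessing task are all hypothetical stand-ins for model reasoning and tool calls. The point is that planning is redone on every step using notes carried over from earlier steps.

```python
def interleaved_loop(goal, plan, act, reflect, max_steps=5):
    """Re-plan before every action, using notes preserved from earlier steps."""
    state = {"goal": goal, "notes": [], "done": False}
    for _ in range(max_steps):
        step = plan(state)            # Plan: decide the next action from current notes
        result = act(step)            # Act: run the tool call
        reflect(state, step, result)  # Reflect: record the outcome, maybe finish
        if state["done"]:
            break
    return state


# Toy task: find an integer whose square equals the goal.
def plan(state):
    # Start at 5 and move one higher for each failed attempt already recorded.
    return 5 + len(state["notes"])


def act(guess):
    return guess * guess  # stand-in for a tool call


def reflect(state, guess, result):
    state["notes"].append((guess, result))
    state["done"] = result == state["goal"]


final = interleaved_loop(49, plan, act, reflect)
```

A front-loaded plan would commit to all guesses up front; here each new guess depends on what the previous steps recorded in `state["notes"]`.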

Project Notebooks/Tutorials

▶ [Open Source] Rogue: An Open-Source AI Agent Evaluator worth trying (Codes & Examples)

▶ How to Design an Advanced Multi-Page Interactive Analytics Dashboard with Dynamic Filtering, Live KPIs, and Rich Visual Exploration Using Panel (Codes, Tutorial)

▶ A Coding Guide to Design an Agentic AI System Using a Control-Plane Architecture for Safe, Modular, and Scalable Tool-Driven Reasoning Workflows (Codes, Tutorial)

▶ A Coding Implementation for an Agentic AI Framework that Performs Literature Analysis, Hypothesis Generation, Experimental Planning, Simulation, and Scientific Reporting (Codes, Tutorial)