Here is today’s AI Dev Brief from Marktechpost, covering core research, models, infrastructure tools, and applied updates for AI developers and researchers.

NVIDIA AI Released Nemotron Speech ASR: A New Open Source Transcription Model Designed from the Ground Up for Low-Latency Use Cases like Voice Agents

NVIDIA has released Nemotron Speech ASR, a 0.6B-parameter English streaming model built on a cache-aware FastConformer RNNT architecture. It delivers sub-100 ms ASR latency with about 7.2 to 7.8 percent WER across standard benchmarks, and it scales to 3 times more concurrent streams than buffered baselines on H100 GPUs. Deployed alongside Nemotron 3 Nano 30B and Magpie TTS, it enables voice agents with a median time to final transcription of around 24 ms and roughly 500 ms of server-side voice-to-voice latency. The model is available as a NeMo checkpoint under the NVIDIA Permissive Open Model License for fully self-hosted, low-latency speech stacks... Read the full analysis/article here.
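
The WER figures quoted for Nemotron Speech ASR are word-level edit distances normalized by the length of the reference transcript: WER = (substitutions + deletions + insertions) / reference words. As a minimal sketch, assuming whitespace tokenization and no text normalization (benchmark harnesses usually lowercase and strip punctuation first), the metric can be computed with a standard Levenshtein dynamic program over words. The function name `word_error_rate` is hypothetical, not part of NeMo.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words.

    Assumes simple whitespace tokenization; no lowercasing or punctuation
    stripping is applied.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = minimum edits to turn the first i-1 reference words into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution (cost 1) or match (cost 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

Dropping one word from the six-word reference gives a WER of 1/6, about 16.7 percent; by the same arithmetic, a 7.2 percent WER corresponds to roughly 7 word errors per 100 reference words.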

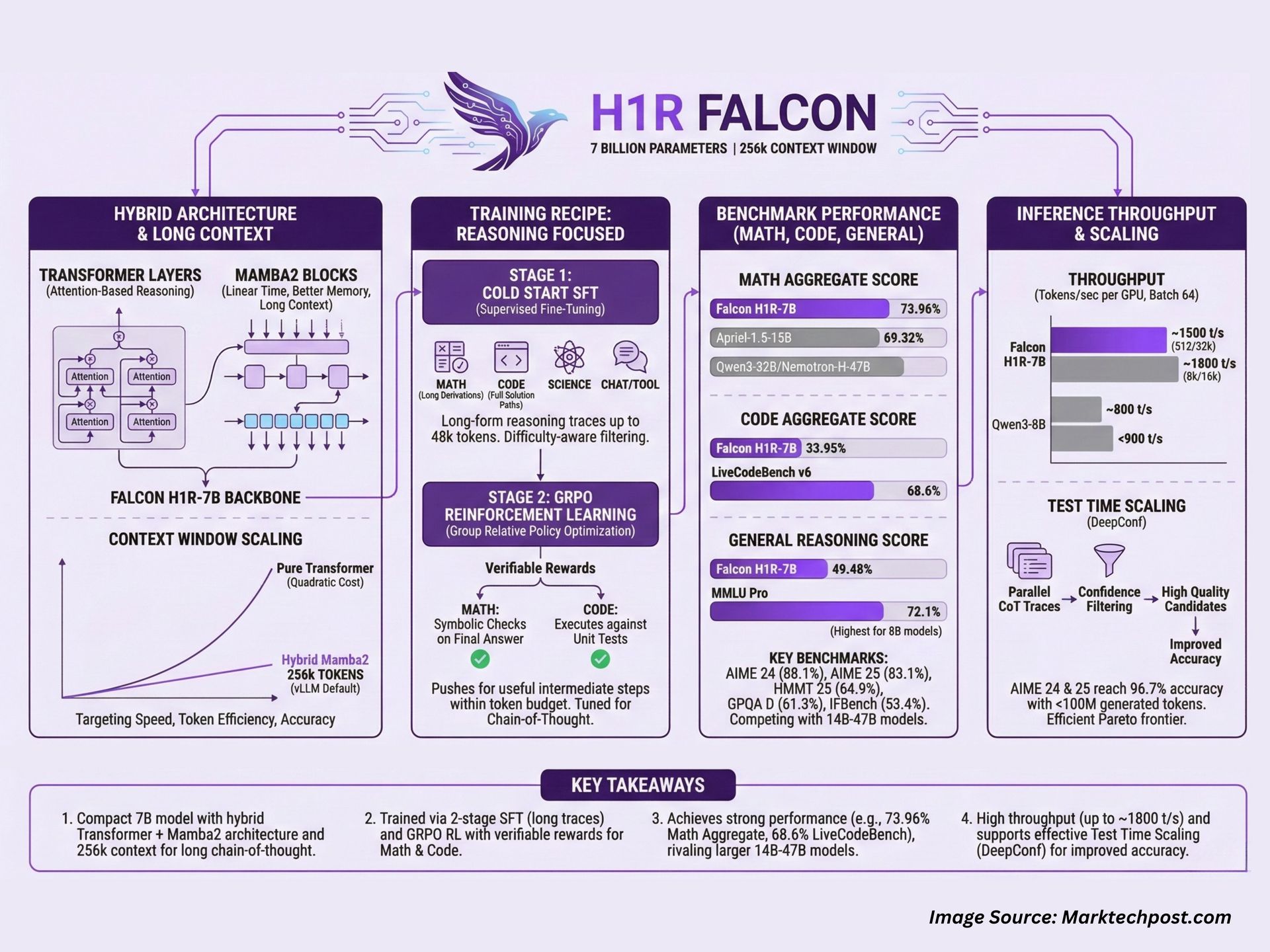

TII Abu Dhabi Released Falcon H1R-7B: A New Reasoning Model Outperforming Others in Math and Coding with Only 7B Params and a 256k Context Window

Falcon H1R-7B is a 7B-parameter, reasoning-focused model from TII. It pairs a hybrid Transformer plus Mamba2 architecture and a 256k-token context window with a two-stage training pipeline (long-form supervised fine-tuning followed by GRPO-based RL) to deliver near-frontier math, coding, and general reasoning performance, including 88.1 percent on AIME 24, 83.1 percent on AIME 25, 68.6 percent on LiveCodeBench v6, and 72.1 percent on MMLU Pro. It maintains high throughput, in the range of 1,000 to 1,800 tokens per second per GPU, and supports test-time scaling with Deep Think with Confidence, making it a compact but capable backbone for math tutors, code assistants, and agentic systems... Read the full analysis/article here.
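
GRPO (Group Relative Policy Optimization) replaces a learned value critic with a group baseline: the policy samples several completions for the same prompt, and each completion's advantage is its reward normalized by the mean and standard deviation of that group. The sketch below shows only this advantage step, assuming population standard deviation and a small epsilon for numerical stability (implementations differ on both). It is not TII's training code, and the helper name `group_relative_advantages` and the exact-match reward are hypothetical.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages for one group of completions sampled from the same prompt.

    Each reward is centered on the group mean and scaled by the group's
    population standard deviation; eps avoids division by zero when all
    rewards are equal (every advantage is then 0, so the group gives no signal).
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical verifiable reward: 1.0 if a sampled math answer matches the reference, else 0.0.
sampled_answers = ["42", "41", "42", "7"]
rewards = [1.0 if answer == "42" else 0.0 for answer in sampled_answers]
print(group_relative_advantages(rewards))
```

Here the two correct answers receive advantages close to +1 and the two incorrect ones close to -1, so the policy-gradient update shifts probability toward completions that beat their own group's average without training a separate critic.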

Liquid AI Releases LFM2.5: A Compact AI Model Family For Real On Device Agents

LFM2.5 is Liquid AI’s new 1.2-billion-parameter model family for real on-device agents. It extends pretraining to 28 trillion tokens and adds supervised fine-tuning, preference alignment, and multi-stage reinforcement learning across text, Japanese, vision-language, and audio workloads, while shipping open weights and ready-to-use deployments for llama.cpp, MLX, vLLM, ONNX, and LEAP... Read the full analysis/article here.

Project Notebooks/Tutorials

▶ [Open Source] Rogue: An Open-Source AI Agent Evaluator Worth Trying (Codes & Examples)

▶ How to Design an Agentic AI Architecture with LangGraph and OpenAI Using Adaptive Deliberation, Memory Graphs, and Reflexion Loops (Codes Tutorial)

Partner with us

Looking to promote your company, product, service, or event to 1 Million+ monthly AI developers and readers?